Is Prompt Design Over?

About two weeks ago, OpenAI announced InstructGPT, a series of new engines that have been fine-tuned on safer, more accurate, and higher-quality GPT-3 completions. I encourage you to check out the research paper, which details much of the process they used to fine-tune the language models.

Ever since the announcement, I have been obsessed with the impacts, future role, and shape of what we currently know as prompt design:

To be honest, this question has been driving me crazy … so, I asked everybody openly what they thought and I got a lot of really good feedback on the Twitter threads above.

My friend Fred had some great points which I loved:

I also got more feedback privately about prompt design being just a single step in the whole process. When it comes to commercializing a GPT-3 application, you may need to create a whole web app around it, which requires some understanding of prompt design.

However, I still feel many questions remain unanswered. To start with, prompt design involves many considerations, such as per-token cost efficiency, your use of imaginative language, your ability to produce completions that match the tone you’re looking for, your curation of high-quality examples, the reliability of your completions, and the overall utilitarian value of your GPT-3 use case. Primarily, great prompt design is about your ability to “trick” a large-scale language model like GPT-3 into acting and performing tasks the way you want. I’ve joked in the past that professional GPT-3 developers should call themselves GPT-3 Prompt Whisperers. With this in mind, how much “tricking” will we need to do in the future?
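
To make the per-token cost consideration concrete, here is a minimal sketch that compares a few-shot prompt with a shorter instruction-style prompt. It rests on loud assumptions: the four-characters-per-token figure is only a rough rule of thumb for English text (not a real tokenizer), and the price is a placeholder, not an actual OpenAI rate.

```python
import math

# Assumption: roughly 4 characters per token for English text.
# This is a rule of thumb, not a real tokenizer.
CHARS_PER_TOKEN = 4

# Assumption: placeholder price per 1,000 tokens, not a real OpenAI rate.
PRICE_PER_1K_TOKENS = 0.06


def estimate_tokens(text: str) -> int:
    """Crude token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_cost(prompt: str, max_completion_tokens: int) -> float:
    """Estimated cost of one request: prompt tokens plus completion tokens."""
    total_tokens = estimate_tokens(prompt) + max_completion_tokens
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS


few_shot_prompt = (
    "English: Good morning\nFrench: Bonjour\n\n"
    "English: Thank you\nFrench: Merci\n\n"
    "English: See you tomorrow\nFrench:"
)
instruction_prompt = "Translate into French: See you tomorrow"

few_shot_cost = estimate_cost(few_shot_prompt, max_completion_tokens=20)
instruction_cost = estimate_cost(instruction_prompt, max_completion_tokens=20)
```

Every example you add to a prompt is paid for on every single request, which is why a model that needs fewer examples changes the economics as well as the ergonomics.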

At the same time, if we follow the vector of technological advancement outlined in the OpenAI scaling law papers, how much more “tricking” will we need to do? By the end of this decade, if we have far cheaper, more powerful models, will we even need to worry about things like per-token cost efficiency?

In a previous podcast, I talked about InstructGPT’s inherent usability, especially for people using GPT-3 for the first time. The magic moment for first-time users is that they don’t need to know prompt engineering to get GPT-3 to do meaningful things. This level of convenience for this important group of users could help them see the value of GPT-3 a lot sooner and could lead to greater virality and growth of the community.

I not only think InstructGPT could lead to greater word of mouth, I even think it could become the engine series of choice for most new GPT-3 developers. I don’t think they will want to trick GPT-3 into doing anything. It should just … well, understand. Since the latest batch of GPT-3 users will be accustomed to better, more intuitive instruct-based models, they may come to expect this kind of behaviour from models in the future, and they may find the older models outside of the instruct series (what I call Davinci Classic) daunting.

It’s worth mentioning that in my last podcast, David Shapiro, a significant OpenAI community contributor, brought up a great point about fine-tuning. He mentioned that fine-tuning only requires input and output examples, with no prompt template at all, which I thought was an incredible point. Although it would be far more work, in theory you could skip prompt design, jump straight to fine-tuning, and get the reliable sorts of GPT-3 completions you want. At the same time, I suspect most consumer-facing commercial GPT-3 applications (outside of known instruct series use cases) may require fine-tuning anyway.
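
As a rough illustration of the "input and output examples only" idea, here is a minimal sketch that turns pairs into JSON Lines records with `prompt` and `completion` keys, which is the general shape GPT-3 fine-tuning data took at the time. The helper name `to_jsonl` and the sample pairs are hypothetical and made up for illustration; check the current OpenAI documentation before relying on any particular format.

```python
import json


def to_jsonl(pairs):
    """Serialize (input, output) pairs as one JSON object per line.

    Note that there is no prompt template here: each record is just
    the raw input and the completion we want the model to learn.
    """
    lines = [
        json.dumps({"prompt": source, "completion": target})
        for source, target in pairs
    ]
    return "".join(line + "\n" for line in lines)


# Hypothetical training pairs, for illustration only.
training_pairs = [
    ("Customer: My order arrived damaged.", " Category: returns"),
    ("Customer: How do I reset my password?", " Category: account"),
]

jsonl_text = to_jsonl(training_pairs)
```

The work shifts from wording a clever prompt to curating good pairs, which is the trade-off described above: more effort up front, but no template to maintain.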

On the other hand, I’ve spoken to others in the community and they believe that Prompt Design is just getting started - we are barely scratching the surface of what’s possible:

For a while now, I have spoken endlessly about the names we have chosen to describe this new, emergent phenomenon of prompt design. Since last year, I have argued time and time again about my hatred of the term prompt engineering. In particular, I wrote:

As language models get better over time, in theory, they should require less prompting and a lot less “engineering” as the years go by. The AI models should be able to understand what we want to happen with a lot less hand-wringing or training on our part. Prompt engineering itself may be a temporary band-aid solution and term we are just throwing out there until the language models get better and entirely human-like.

This is why, with the introduction of the instruct series, I believe we are starting to see the decline of so-called “Prompt Engineering”. I’ve been working on a new video tutorial for beginners on GPT-3, and I realize that in the past, learning GPT-3 essentially meant learning prompt design. Now, with InstructGPT, I think prompt design may be an important module, but not necessarily a requirement, especially for first-timers. Obviously, you are still technically writing a prompt with InstructGPT, but I think you’ll mainly be giving it some examples and using descriptive language. The point is, you’ll have to do a lot less “tricking” over time to make it understand what you want and do things reliably - this fundamental difference is why I think we’ll see a decline in the discipline and art form of Prompt Design.
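
To show the difference in shape between the two styles, here is a minimal sketch of a few-shot prompt (the pattern-completion style a base model tends to need) next to a plain instruction. The function names and the translation task are hypothetical, chosen only for illustration, and no API call is made.

```python
def build_few_shot_prompt(examples, query):
    """Base-model style: show a pattern and let the model continue it."""
    blocks = [f"English: {source}\nFrench: {target}" for source, target in examples]
    # Leave the final answer slot open so the model fills it in.
    blocks.append(f"English: {query}\nFrench:")
    return "\n\n".join(blocks)


def build_instruction_prompt(query):
    """Instruct style: describe the task in plain language."""
    return f"Translate the following sentence into French:\n\n{query}"


examples = [("Good morning", "Bonjour"), ("Thank you", "Merci")]

few_shot = build_few_shot_prompt(examples, "See you tomorrow")
instruction = build_instruction_prompt("See you tomorrow")
```

The first prompt only works because it sets up a pattern for the model to continue; the second simply says what is wanted. That gap is the "tricking" I keep referring to.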

Also, yes, you could say you need to know prompt design to integrate GPT-3 into your web application and feed in values inputted by a user, but really, back in the day, we would just call this basic programming string manipulation :)
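
Here is roughly what that string manipulation looks like, as a minimal sketch. The template text, the `render_prompt` name, and the 200-character limit are all hypothetical choices for illustration, not a recommended production setup.

```python
# Hypothetical template; the {product} slot is filled with the user's input.
PROMPT_TEMPLATE = (
    "Write a short, friendly product description for: {product}\n\nDescription:"
)


def render_prompt(user_input: str, max_chars: int = 200) -> str:
    """Insert user input into the template after light cleanup."""
    # Collapse runs of whitespace (including newlines) into single spaces,
    # then truncate so one user cannot inflate the prompt length.
    cleaned = " ".join(user_input.split())[:max_chars]
    return PROMPT_TEMPLATE.format(product=cleaned)


prompt = render_prompt("  red\n bicycle ")
```

Nothing here is specific to language models: it is a template, a bit of cleanup, and a call to `str.format`.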

As a business decision, OpenAI switching over to InstructGPT as their default engine says a lot about their confidence in fine-tuned, aligned models like this going forward. Not only does this add to my case that prompt design may become a lot less important, I also think it shows that, at least if your plan is to use OpenAI products exclusively, we may not have a choice going forward if they decide to remove the older engines.

To reiterate, as language models continue to become more powerful, reliable, and cheaper, I think the importance of prompt design will decline over time. At some point in the near future, this whole topic could very well be an appendix item or even a footnote. Instead of being the core and lifeblood of language model application development, it could very well be viewed as a temporary point in history until the language models matured.

So, are there any remaining advantages to using Prompt Design today? Is there any reason to write a prompt from scratch at all using Davinci Classic? To start with, I think after you’ve tested out your use case with InstructGPT, if you are not happy with its results even after adding few-shot examples, it may be worth writing a prompt with Davinci Classic to see how it performs instead. I believe there are some downsides to InstructGPT, like how it was fine-tuned, which may adversely affect your GPT-3 use case. So, in this regard, I’m saying that if you crave more control over the engine, want to compare, are not happy with your current results, and are yourself an intermediate GPT-3 user, it makes sense at that point to try your use case outside of the Instruct series. Overall, I would describe the current choice of which GPT-3 engine to use as akin to choosing between a manual and an automatic car.

The larger thing I think we’re observing is that using Davinci Classic and leaning heavily on Prompt Design is now one of many decent options you have to get GPT-3 to do stuff. You can use InstructGPT, fine-tuning, or any of the older engines, depending upon what you’re trying to do. I want to say explicitly that it’s also OK if you simply prefer using Davinci Classic. All I’m saying is that I believe community preferences, as well as the language model technology itself, will trend towards something intuitive like InstructGPT over time. So, you will likely be swimming against the current on this one.

What does this mean for teaching GPT-3 to new users? Well, I think it’s a no-brainer to start first-timers off with InstructGPT, and then show them something like Davinci Classic and explain the differences that result from InstructGPT’s clever fine-tuning. For a really thorough course, you could instead start with Davinci Classic and teach prompt design, so that students appreciate something like InstructGPT even more. I’m not sure if this would be overkill - almost like you are learning from first principles or something - but this could be an educational arc that leads to better GPT-3 app developers.

It’s worth saying, personally, I’m conflicted about this whole decline thing because I do believe prompt writing is a real skill. I’ve noticed some people actually have a knack for writing great GPT-3 prompts. They are capable of getting GPT-3 to do things I never would have imagined and their command over the written word is far superior to mine, regardless of the technological experience I may be bringing to the table. So, I’m really sad to see this immediate decline of such a young and emerging art form.

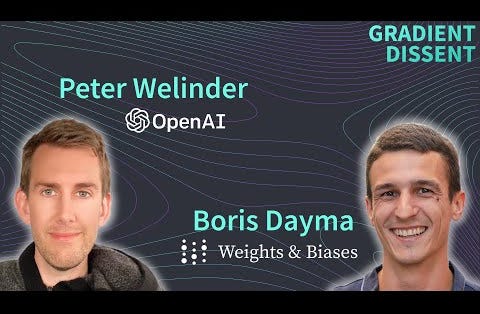

To put a lid on this whole debate, since writing this article, Peter Welinder, who is the VP of Product & Partnerships at OpenAI, has confirmed that Prompt Engineering may be in decline.

At 25:07, he said that prompt design is:

a little bit less of a thing now than it was like a year ago ... and my hope is that it becomes less and less of a thing, and it becomes much more, almost, interactive

To close out this entire discussion, broadly, I wanted to ask an even more important question. What is after the age of prompt design? How will the art form of GPT-3/LLM app development evolve going forward? What will new users who are not held back by learning something like prompt engineering come up with? I think with more usable and more powerful language models, perhaps, the art form will put a greater emphasis on the originality of the GPT-3 use case itself, in areas nobody has ever thought of before. It could also go towards a greater focus on collecting highly specific fine tuning datasets and the reliability of the completions. Perhaps, we’ll see an interest in more advanced prompt architectures grow as well. I’m, honestly, really excited to see this more unconstrained version of the GPT-3 development community going forward.