Prompt engineering is a real phenomenon. If you don't know, it's basically coming up with clever text-based scripts to make GPT-3 do what you want.

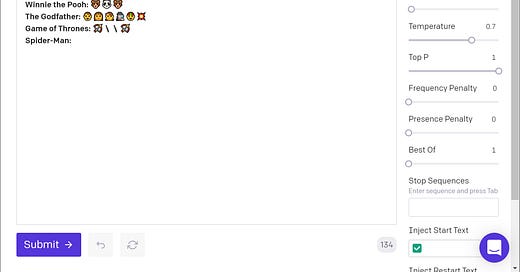

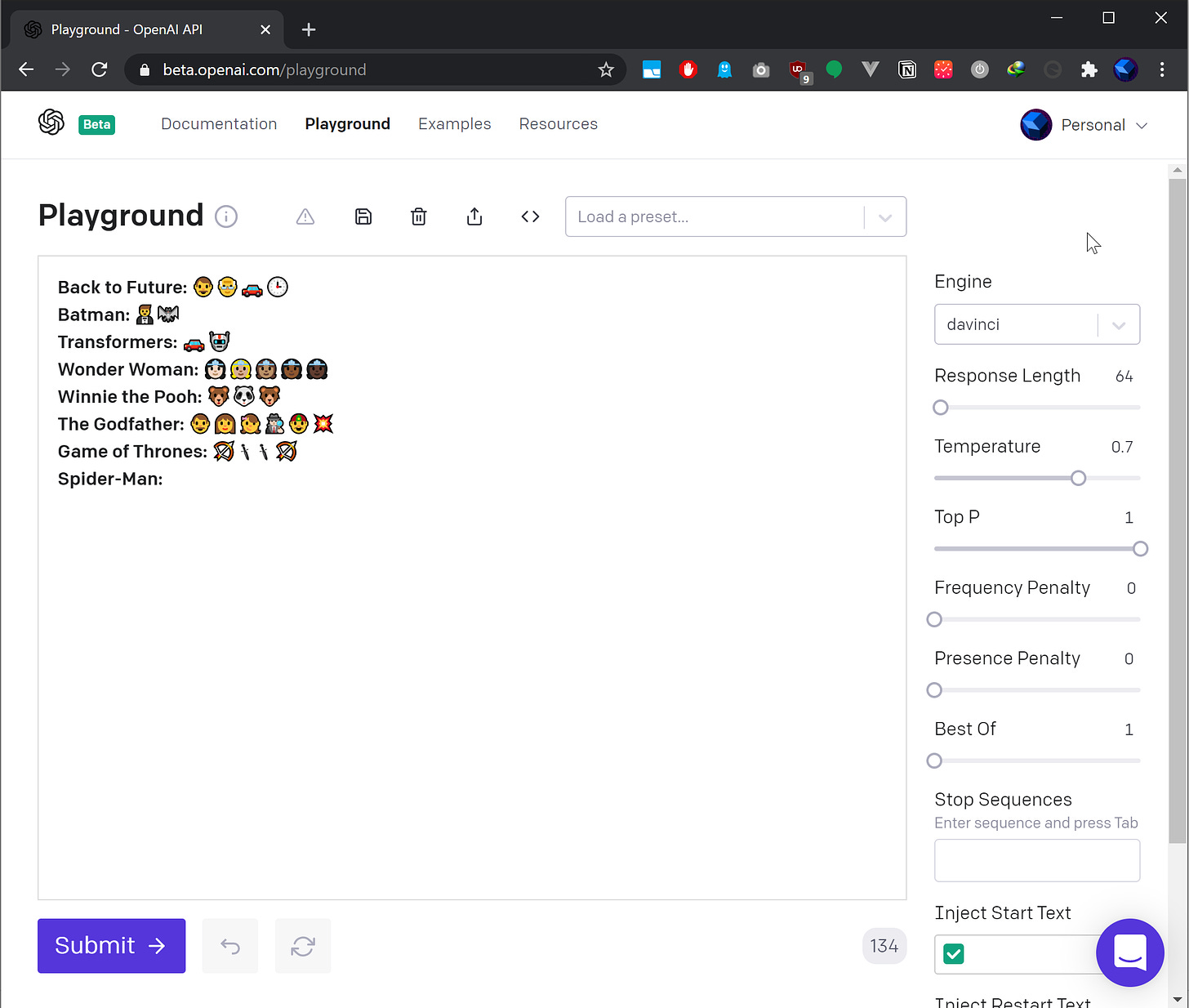

For example, here's a prompt to get GPT-3 to turn a movie title into an emoji description:

Back to Future: 👨👴🚗🕒

Batman: 🤵🦇

Transformers: 🚗🤖

Wonder Woman: 👸🏻👸🏼👸🏽👸🏾👸🏿

Winnie the Pooh: 🐻🐼🐻

The Godfather: 👨👩👧🕵🏻♂️👲💥

Game of Thrones: 🏹🗡🗡🏹

Spider-Man:<GPT-3 would auto complete output here>
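To make the pattern concrete, here is a minimal sketch of how a prompt like the one above could be put together in code. The helper name `build_prompt` and the `EXAMPLES` list are made up for illustration and are not part of any GPT-3 API; the sketch only builds the text and does not call a model. It also assumes single newlines between examples, which is a layout choice and not a requirement.

```python
# Hypothetical helper: the names and layout here are illustrative assumptions,
# not part of any GPT-3 API. The script only assembles the prompt text.
EXAMPLES = [
    ("Back to Future", "👨👴🚗🕒"),
    ("Batman", "🤵🦇"),
    ("Transformers", "🚗🤖"),
]


def build_prompt(examples, title):
    """Write out the worked examples, then leave the final line open for the model."""
    lines = [f"{name}: {emoji}" for name, emoji in examples]
    lines.append(f"{title}:")  # the model would be asked to complete this line
    return "\n".join(lines)


prompt = build_prompt(EXAMPLES, "Spider-Man")
print(prompt)
```

The whole "program" is a handful of examples plus one unfinished line, which is most of what prompt engineering involves at this level.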

But my problem with prompt engineering is simply the name we've given it. I'm not sure where it came from. I assume it has some basis in machine learning, but it's a very intimidating-sounding name.

Sure, you have to think about character limits and you have to be clever with GPT-3 to get it to do what you want, so it's not that straightforward, and it does require making some design decisions. It can also become complex as you combine different prompts in parallel and need to commercialize a prompt reliably (and safely). However, is prompt engineering really engineering?

Is it really as complex as other branches of engineering? You often hear criticisms like “software is not engineering,” or that “computer science is not a science” even though it has the word “science” in the name. That is debatable, but I don’t think it’s crazy to say that prompt engineering is nowhere near as hard as software engineering or CS.

Here’s the thing: our current understanding of prompt engineering is that it’s all about using natural language (i.e., usually English) to describe a task, sharing some examples, pushing a button, and watching the AI do stuff for us. This is as straightforward as a process can get. A child with no formal engineering training could do it, so why are we calling it engineering?

I think part of the problem is that very few labels fit this “example-based programming paradigm.” It’s not quite code and it’s not quite English, so we call it a “prompt.” At the same time, it’s very straightforward but could get more complicated as you commercialize, so we call it “engineering”? I guess. Maybe it’s a safety thing, but safety and risk are not concepts exclusive to engineering that only engineers can understand, think through, and implement in the real world.

I’m sure people said the same thing about web development, or desktop software, or every layer of the technical stack. Something along the lines of, “How is this even engineering? It’s so straightforward!” And sure, maybe this article will come back to haunt me if prompt engineering ends up being something with far more depth and complexity, expanding outwards into various sub-fields. But this time, I genuinely feel like things are different. As language models get better over time, they should, in theory, require less prompting and a lot less “engineering” as the years go by. The AI models should be able to understand what we want with a lot less hand-holding or training on our part. Prompt engineering itself may be a temporary band-aid, both as a solution and as a term, that we are just throwing out there until the language models get better and become entirely human-like.

Labels don’t matter early on, but as the GPT-3 community grows, I think it’s worth giving some consideration to the names we give things. I was recently at a Clubhouse event, and I hated telling people who don’t know anything about GPT-3 to look into “prompt engineering” when they were curious about how to make it do stuff. “Prompt engineering” sounds far grander than the job actually is and, honestly, it just sounds intimidating.

At the same time, the name sells GPT-3 short. It doesn’t give GPT-3 the credit it deserves. GPT-3 can communicate in natural language, in multiple languages spoken around the world, and it often just “gets” what you mean and knows what you want it to do. The breakthrough of GPT-3 is that it doesn’t require advanced kinds of AI trickery and engineering to make it work. So why do we stick with this name?

I also want to make this point quite clear because I think it’s important: the name particularly scares off non-technical people from diverse backgrounds, because it makes the whole process of using GPT-3 sound a lot harder than it actually is. To them, it sounds like they still can’t participate in AI unless they are already Silicon Valley engineering insiders. If we want to increase the diversity of our community and bring in people from all walks of life (especially those who are non-technical), I think a great step forward would be calling prompt engineering something else, something more user-friendly and inclusive that actually reflects the inherent usability of GPT-3.

I don’t have a name suggestion to propose here, but I do think this idea has some merit and is worth further discussion. It’s not too late to change the arbitrary labels we’ve given things; we’re still very early on. We also have a real opportunity to do some good here, so it’s worth a shot.

It's not any more intimidating to me than terms like social engineering, which this feels closer to. I think it captures the feeling of coaxing GPT-3 pretty well. Yet because I view GPT-3 as a "search engine for things that haven't been written yet", it is probably closer to search query optimization. I think "prompt engineering" will come into play more for power users, while casual users of the technology won't worry so much about careful tuning.